The most commonly considered parameter that tests vary on is scope (or sometimes referred to as “size”).

Scope is relative to a testable unit. A test that focuses on a single such unit is called a unit test, and a test that exercises several units together is called an integration test. Most tests can also be arranged in the testing pyramid.

A unit is arbitrarily defined from the test author’s perspective. For instance in object-oriented programming, a unit is typically a class. Throughout this document, and generally on Fuchsia, is is often mention ed that a unit under test is a component unless otherwise stated.

As you write tests for Fuchsia, you want to make sure that you are familiarized with the testing principles and the best practices for writing tests.

Scope of tests

Tests at different scope complement each other’s strengths and weaknesses. A good mix of tests can create a great testing plan that allows developers to make changes with confidence and catches real bugs quickly and efficiently.

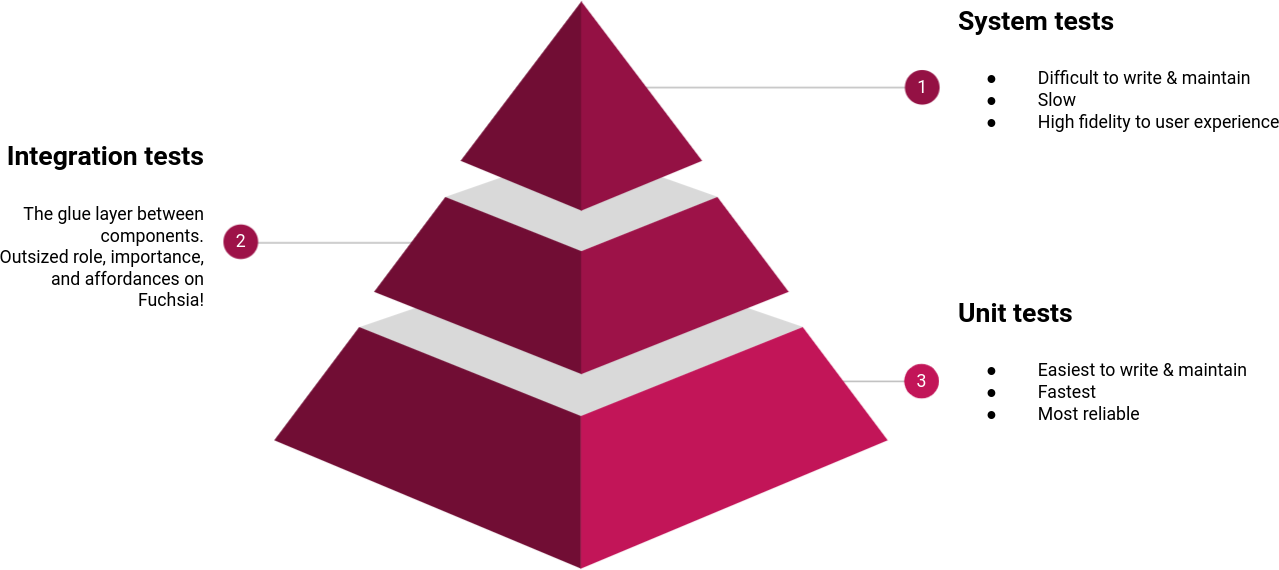

When considering what kind of tests to write and how many of each, imagine tests arranged as a pyramid:

Many software testing publications advocate for a mix of 70% unit tests, 20% integration tests, 10% system tests. Fuchsia recommends investing more in integration testing at the expense of other types of testing for the following reasons:

- Fuchsia emphasizes componentization of software. Applications and systems on Fuchsia tend to exercise the boundaries between components very extensively. Therefore we recommend that you test those boundaries.

- Fuchsia’s Component Framework makes it possible, sometimes easy, to reuse production components in tests, which lowers the cost of developing integration tests.

- Fuchsia’s testing runtime offers the same level of isolation for integration tests as it does for unit tests, making integration testing as reliable.

- Communication between components is orders of magnitude slower than a direct method call, but still pretty fast. Integration tests typically don’t run slower than unit tests to an extent that is perceptible by a human developer.

- There already exist many integration tests on Fuchsia that serve as useful examples.

For more information on the various tests from the testing pyramid in Fuchsia:

There are also specialized tests that fall outside of the testing pyramid. For more information, see specialized testing.

See also:

Unit tests

On Fuchsia, tests that are fully defined in a single component are called unit tests.

Unit tests can run in an isolated realm that serves as their sandbox. This keeps tests from acquiring unexpected capabilities, from being influenced by system state outside the intended scope of the test, and from influencing other tests that are running in parallel or in sequence on the same device.

Unit tests are controlled by direct invocations of code within the body of the

test. For instance,

audio_effects_example_test.cc exercises code

in a loadable module that implements audio effects and validates that the code

works.

In a healthy testing pyramid, unit tests are the broad base for testing because unit tests offer the highest degree of isolation and are the fastest tests, which in turn produces very reliable and useful positive signals (tests pass) and negative signals (the test failed with an actionable error indicating a real defect). Unit tests also produce the most actionable test coverage information, and enjoy other fringe benefits such as when run in combination with sanitizers. Therefore any testing needs that can be met with unit tests should be met with unit tests.

A unit test is said to be hermetic if only the contents of the test’s package influence whether the test passes or fails.

See also:

Integration tests

On Fuchsia, tests that span multiple components that are delivered in a single test package are called integration tests.

In a healthy testing pyramid, integration tests are the next most common types of tests after unit tests. Integration tests on Fuchsia are typically fast, though not as fast as unit tests. Integration tests on Fuchsia are isolated from outside effects from the rest of the system, same as unit tests. Integration tests are more complex than unit tests to define and drive. Therefore any testing needs that can’t be met with unit tests but can be met with integration tests should be met with integration tests.

Integration testing has an outsized role on Fuchsia compared to other platforms. This is because Fuchsia encourages software developers to decompose their code into multiple components in order to meet the platform’s security and updatability goals, and then offers robust inter-component communication mechanisms such as channels and FIDL.

In an integration test, an isolated

realm

is created similarly

to a unit test, but then multiple components are staged in that realm and

interact with each other. The test is driven by one of the components in the

test realm, which also determines whether the test passed or failed and provides

additional diagnostics. For instance, the

font server integration tests test a font resolver

component that exposes the

fuchsia.pkg.FontResolver FIDL protocol as a

protocol capability, and then validate that component

by invoking the methods in its contract with clients and validating expected

responses and state transitions.

Integration tests are controlled by the test driver. The test driver sets up one or more components under test, exercises them, and observes the outcomes such as by querying the state of those components using their supported interfaces and other contracts.

Within the test realm, standard capability routing works exactly the same way as it does in any other realm. In test realms, capability routing is often used as a mechanism for dependency injection. For instance a component under test that has a dependency on a capability provided by another component that’s outside the test scope may be tested against a different component (a “test double”), or against a separate instance of the production component that is running in isolation inside the test realm.

An integration test is said to be hermetic if only the contents of the test’s package influence whether the test passes or fails.

See also:

- Complex topologies and integration testing: How to statically define a test realm with multiple components and route capabilities between them.

- Realm builder: An integration testing helper library for runtime construction of test realms and mocking components in individual test cases.

System tests

On Fuchsia, tests that aren’t scoped to the contents of a single package and of one or more components contained within are called system tests. The scope of such tests is not strictly defined in terms of components or packages, and may span up to and including the entire system as built in a given configuration.

System tests are sometimes referred to as Critical User Journey (CUJ) tests or End-To-End (E2E) tests. Some might say that such tests are E2E if the scope is even greater than a single Fuchsia device, for instance when testing against a remote server or when testing in the presence of specific wifi access points or other connected hardware.

In a healthy testing pyramid, system tests are the narrow tip of the pyramid, indicating that there are fewer system tests than there are tests in other scopes. System tests are typically far slower than unit tests and integration tests because they exercise more code and have more dependencies, many of which are implicitly defined rather than explicitly stated. System tests don’t offer an intrinsic degree of isolation and are subject to more side effects or unanticipated interference, and as a result they are typically less reliable than other types of tests. Because the scope of code that’s exercised by system tests is undefined, Fuchsia’s CI/CQ doesn’t collect test coverage from E2E tests (collecting coverage would yield unstable or flaky results). E2E configurations are not supported by sanitizers for similar reasons. For this reason you should write system tests when their marginal benefits cannot be gained by unit tests or integration tests.

Additional testing

There are various specialized tests in Fuchsia:

Kernel tests

Testing the kernel deserves special attention because of the unique requirements and constraints that are involved. Tests for the kernel cover the kernel’s surface: system calls, kernel mechanics such as handle rights or signaling objects, the vDSO ABI, and userspace bootstrapping, as well as any important implementation details.

Most tests for the kernel run in user space and test the kernel APIs from the

outside. These are called core usermode tests. For instance,

TimersTest tests the kernel’s timer APIs. These tests are more

difficult to write than regular tests: only certain programming languages are

supported, there are weaker isolation boundaries between tests, tests can’t run

in parallel, it’s not possible to update the tests without rebooting and

repaving, and other constraints and limitations apply. These tradeoffs allow the

tests to make fewer assumptions about their runtime environment, which means

that they’re runnable even in the most basic build configurations

(bringup builds) without the component framework or advanced

network features. These tests are built into the

zbi

. It’s possible to run these tests such

that no other programs run in usermode, which helps isolate kernel bugs.

Some kernel behaviors cannot be tested from the outside, so they’re tested with

code that compiles into the kernel image and runs in kernelspace. These tests

are even more difficult to write and to troubleshoot, but have the most access

to kernel internals, and can be run even when userspace bootstrapping is broken.

This is sometimes necessary for testing. For instance,

timer_tests.cc tests timer deadlines, which cannot be tested

precisely just by exercising kernel APIs from userspace because the kernel

reserves some slack in how it may coalesce timers set from

userspace.

Compatibility tests

Compatibility tests, sometimes known as conformance tests, extend the notion of correctness to meeting a given specification and complying with it over time. A compatibility test is expressed in certain contractual terms and can be run against more than one implementation of that contract, to ensure that any number of implementations are compatible.

For example:

- The Compatibility Tests for Fuchsia (CTF) are tests that validate Fuchsia’s system interface. CTF tests can run against different versions of Fuchsia, to ensure that they remain in compatibility or to detect breaking changes. In the fullness of time, CTF tests will cover the entirety of the surface of the Fuchsia SDK.

- FIDL uses a specific binary format (or wire format) to encode FIDL messages that are exchanged between components over channels. FIDL client-side and server-side bindings and libraries exist in many languages such as C, C++, Rust, Dart, and Go. The FIDL compatibility tests and Golden FIDL (GIDL) tests ensure that the different implementations are binary-compatible, so that client and servers can talk to each other correctly and consistently regardless of their developer’s choice of programming language.

- Fuchsia Inspect uses a certain binary format to encode diagnostics information. Inspect is offered in multiple languages and the underlying binary format may change over time. Inspect validator tests ensure binary-compatibility between all reader/writer implementations.

Performance tests

Performance tests exercise a certain workload, such as a synthetic benchmark or a CUJ, and measure performance metrics such as time to completion or resource usage during the test.

A performance test may ensure that a certain threshold is met (for instance frames render at a consistent rate of 60 FPS), or they may simply collect the performance information and present it as their result.

For instance:

- Many performance tests and performance testing utilities can be found in the end-to-end performance tests directory. These cover a range of targets such as kernel boot time, touch input latency, Flutter rendering performance, and various micro-benchmarks for performance-sensitive code.

- Perfcompare is a framework for comparing the results of a given benchmark before and after a given change. It’s useful for measuring performance improvements or regressions.

- FIDL benchmarks have various-sized benchmarks for FIDL generated code.

- Netstack benchmarks test the network stack’s performance using micro-benchmarks for hot code.

Stress and longevity tests

Stress tests exercise a system in ways that amount to exceptionally high load, but are otherwise expected within the system's contract. Their goal is to demonstrate the system's resilience to stress, or to expose bugs that only happen under stress.

In a stress test, the system under test is isolated from the test workload components. Test workload components can do whatever they wish, including crashing without bringing down the system under test.

For instance, the minfs stress tests performs many operations on a MinFS partition in repetition. The test can verify that the operations succeed, that their outcomes are observed as expected, and that MinFS doesn’t crash or otherwise exhibit errors such as filesystem corruption.

Tests that intentionally exercise a system for a long duration of time are a type of stress test known as a longevity test. In a longevity test, the span of time is intrinsic to the load or stress on the system. For instance a test where a system goes through a user journey 10,000 times successfully is a stress test, whereas a test where a system goes through the same user journey in a loop for 20 hours and remains correct and responsive is also a longevity test.

See also:

Fuzzing

Fuzzers are programs that attempt to produce valid interactions with the code under test that will result in an erroneous condition, such as a crash. Fuzzing is directed by pseudorandom behavior, or sometimes through instrumentation (seeking inputs that cover new code paths) or other informed strategies such as pregenerated dictionaries or machine learning.

Experience has shown that fuzzers can find unique, high-severity bugs. For

instance reader_fuzzer.cc found bugs in Inspect reader code

that would result in a crash at runtime, even though this code was already

subjected to extensive testing and sanitizers.

See also: